Just another quick post today about possibly speeding up data loads to an ASO database when loading from SQL. I got on a quick call with a former colleague who was looking to gain a little more performance on their load process to a massive ASO database, and the first thing that jumped out at me was my recollection that you can do parallel loads with some native MaxL syntax.

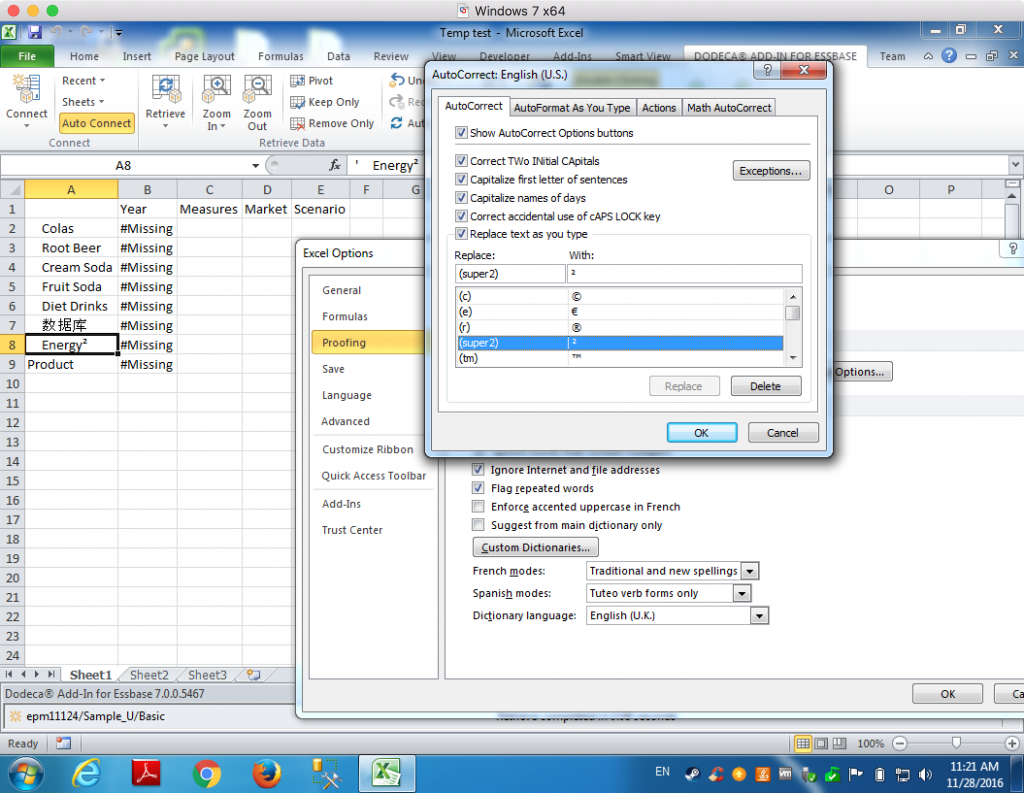

Here’s a quick example of the syntax:

import database $APPLICATION.$DATABASE data connect as $SQL_USER identified by $SQL_PW using multiple rules_file $RULE1, $RULE2, $RULE3, $RULE4, $RULE5 to load_buffer_block starting with buffer_id 100 on error write to "errors.txt";

Basically, you provide multiple rules files (configured for your SQL datasource of course). The rules files are likely to be the same as each other but I suppose it’s possible you might want to partition the data in some logical way to try and speed things up even more.
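If you generate your MaxL from a script, the statement above can be assembled for any number of rules files. This is just a minimal sketch: the function name and its parameters are made up for illustration, and it only reproduces the syntax shown in the example rather than covering everything the import statement supports.

```python
def build_parallel_import(app, db, sql_user, sql_pw, rules_files,
                          first_buffer_id=100, error_file="errors.txt"):
    """Assemble a parallel SQL import statement like the one shown above.

    Hypothetical helper: it only joins strings and does not talk to Essbase.
    """
    if not rules_files:
        raise ValueError("at least one rules file is required")

    # The rules files are passed to MaxL as a comma-separated list.
    rules = ", ".join(rules_files)

    return (
        f"import database {app}.{db} data "
        f"connect as {sql_user} identified by {sql_pw} "
        f"using multiple rules_file {rules} "
        f"to load_buffer_block starting with buffer_id {first_buffer_id} "
        f'on error write to "{error_file}";'
    )


if __name__ == "__main__":
    statement = build_parallel_import(
        "$APPLICATION", "$DATABASE", "$SQL_USER", "$SQL_PW",
        ["$RULE1", "$RULE2", "$RULE3", "$RULE4", "$RULE5"],
    )
    print(statement)
```

Passing the same placeholders as the example yields the same statement, so adding or removing a rules file becomes a one-line change in the list.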

For example, let’s say that in the code above, we are loading five years of data from a relational database. We might then set up each rules file to handle one particular year by doing the following things:

- Set the year in the data header

- Remove that column from the list of SELECT columns

- Put a filter/predicate in the WHERE clause on the query

- Bonus points for using substitution variables in both the header definition and the WHERE clause
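To make the per-year split concrete, here is a rough sketch of what the query behind each rules file might look like. Everything here is an assumption for illustration: the table name `FACT_SALES`, the column names, and the year labels are invented, and in practice you would enter the query in each rules file's SQL settings (ideally with substitution variables) rather than generate it like this.

```python
def per_year_queries(table, columns, year_column, years):
    """Build one query per year, dropping the year column from the SELECT list.

    Hypothetical helper for illustration only. It uses plain string
    formatting, so it should not be fed untrusted input.
    """
    # The year moves to the rules file's data header, so it is no longer selected.
    select_cols = [c for c in columns if c != year_column]

    queries = {}
    for year in years:
        queries[year] = (
            f"SELECT {', '.join(select_cols)} "
            f"FROM {table} "
            f"WHERE {year_column} = '{year}'"
        )
    return queries


if __name__ == "__main__":
    queries = per_year_queries(
        table="FACT_SALES",  # assumed table name
        columns=["FISCAL_YEAR", "ACCOUNT", "ENTITY", "AMOUNT"],  # assumed columns
        year_column="FISCAL_YEAR",
        years=["FY21", "FY22", "FY23", "FY24", "FY25"],  # assumed year labels
    )
    for year, sql in queries.items():
        print(year, "->", sql)
```

Each rules file then pulls a non-overlapping slice of the data, which is what lets the parallel load buffers split the work instead of repeating it.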

Performance in this particular use case went up substantially. It’s my understanding that data loads that were taking an hour are now cut down to 17 minutes. Your mileage may vary, of course.

Let’s Not Forget About Hybrid BSO

That said, I think this can be an effective strategy for trying to squeeze performance out of some ASO cubes that need a smaller load window and you don’t want to go changing a lot of the internals in play. If you’re doing new development, then I strongly, strongly recommend using hybrid BSO (or rather, BSO and making sure the cube is configured properly so as to get the hybrid BSO performance benefits). I have been seeing hybrid BSO cubes absolutely killing it in performance, what with their ability to leverage ASO technology for aggregates, and massive calculation improvements owing to the smaller block sizes and indexes you get from having so many dynamic calc members in dimensions. Plus, you of course get all of the classic/rich/awesome BSO functionality out of the box, like dynamic time series, expense tagging, time balance, and more. These were never very strong areas for ASO and often required a lot of non-optimal workarounds to make users happy.