A colleague of mine is running into an issue with substitution variables and was looking for a solution that he could use to sync values up. He thought maybe Vess would be a good fit. Vess, as I have blogged about before, is a “virtual” Essbase JDBC driver. Vess maps Essbase concepts and crams them into a typical database model. For example, Vess exposes tables that model substitution variables.

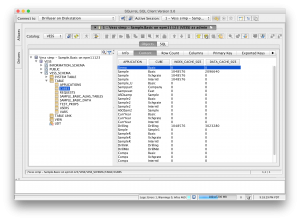

In the case of server-wide substitution variables, there is a “VARS” table that has two columns: NAME and VALUE. For each application, there is another table that contains four columns: NAME, VALUE, APPLICATION, DATABASE.

As a quick aside, it might seem a little odd to have separate tables. After all, this is notionally about the same as the Variables screen in EAS. Well, you have to flip your thinking a little. Don’t think of variables as belonging to either the server or a cube; think in terms of which variables are applicable to a cube. In other words, if you ask Essbase what variables are applicable to the whole server, you get the global variables only. If you ask Essbase what variables are applicable to a cube, you get the cube-, app-, AND server-specific variables. This is one of the reasons there are multiple tables to model the variables.

Getting back on track, given that we have these tables and we can treat them just like normal SQL tables, we can do some interesting things. Let’s say we want to create or update an app-specific variable based on one that exists in the global scope. We can do this in one line:

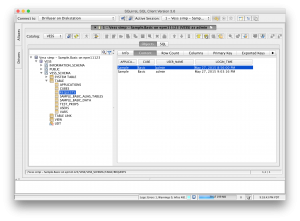

INSERT INTO SAMPLE.VARS
SELECT NAME, VALUE, 'Sample', 'Basic'
FROM VESS_SCHEMA.VARS WHERE NAME = 'Foo';

What’s going on here? In Vess, a schema named VESS_SCHEMA is presented for server-wide things (server-wide variables are in the table VARS in this schema, as shown above). The server VARS table only has columns NAME and VALUE.

Each application on the Essbase server is modeled as its own schema. In this case, our favorite app – Sample – gets a schema named SAMPLE. This schema also contains a VARS table (containing columns NAME, VALUE, APPLICATION, and DATABASE).

Given these tables, it’s a simple matter of selecting the server variable with the name we want (in this case, a variable named ‘Foo’) and inserting it into the variables for the Sample app.
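Since Vess itself isn't publicly available, here is a rough way to try the shape of that statement without it. This sketch uses Python's built-in sqlite3 module purely as a stand-in: two in-memory databases are attached under the names VESS_SCHEMA and SAMPLE to mimic the schemas described above, and the seeded variables ('Foo', 'Bar', and their values) are made up. It also drops the WHERE clause so that every server variable is copied, which is closer to my colleague's sync problem. Keep in mind that in SQLite a plain INSERT only adds rows; nothing here says how Vess treats a variable that already exists.

```python
import sqlite3

# Stand-in only: SQLite attached databases play the role of Vess schemas.
conn = sqlite3.connect(":memory:")
conn.execute("ATTACH DATABASE ':memory:' AS VESS_SCHEMA")
conn.execute("ATTACH DATABASE ':memory:' AS SAMPLE")
conn.execute("CREATE TABLE VESS_SCHEMA.VARS (NAME TEXT, VALUE TEXT)")
conn.execute(
    'CREATE TABLE SAMPLE.VARS '
    '(NAME TEXT, VALUE TEXT, APPLICATION TEXT, "DATABASE" TEXT)'
)

# Made-up server-wide variables, for illustration only.
conn.executemany(
    "INSERT INTO VESS_SCHEMA.VARS VALUES (?, ?)",
    [("Foo", "Jan"), ("Bar", "FY15")],
)

# Same shape as the statement above, minus the WHERE clause,
# so every server variable is copied into the Sample/Basic scope.
conn.execute(
    "INSERT INTO SAMPLE.VARS "
    "SELECT NAME, VALUE, 'Sample', 'Basic' FROM VESS_SCHEMA.VARS"
)

synced = conn.execute(
    'SELECT NAME, VALUE, APPLICATION, "DATABASE" '
    "FROM SAMPLE.VARS ORDER BY NAME"
).fetchall()
print(synced)
```

The only moving part is the INSERT ... SELECT; everything before it is scaffolding to give the statement something to run against.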

Of course, if we wanted to, we could alter the name along the way using normal SQL string functions (truncation, substrings, and so on). We can also delete variables, such as this:

DELETE FROM SAMPLE.VARS WHERE DATABASE = 'Basic';
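To make the renaming and deleting ideas a bit more concrete, here is a small sketch. Vess isn't publicly available, so it runs against Python's built-in sqlite3 module as a stand-in, with attached in-memory databases named VESS_SCHEMA and SAMPLE mimicking the schemas. The variable names and values are invented, and while `||` is standard SQL concatenation, which string functions Vess actually accepts is not something this example establishes. It copies 'Foo' under a prefixed name, then deletes a single variable by name rather than everything for the cube.

```python
import sqlite3

# Stand-in only: SQLite attached databases play the role of Vess schemas.
db = sqlite3.connect(":memory:")
db.execute("ATTACH DATABASE ':memory:' AS VESS_SCHEMA")
db.execute("ATTACH DATABASE ':memory:' AS SAMPLE")
db.execute("CREATE TABLE VESS_SCHEMA.VARS (NAME TEXT, VALUE TEXT)")
db.execute(
    'CREATE TABLE SAMPLE.VARS '
    '(NAME TEXT, VALUE TEXT, APPLICATION TEXT, "DATABASE" TEXT)'
)

# Made-up rows, for illustration only.
db.execute("INSERT INTO VESS_SCHEMA.VARS VALUES ('Foo', 'Jan')")
db.execute("INSERT INTO SAMPLE.VARS VALUES ('Old', 'Dec', 'Sample', 'Basic')")

# Copy Foo into the app scope under a prefixed name.
db.execute(
    "INSERT INTO SAMPLE.VARS "
    "SELECT 'App' || NAME, VALUE, 'Sample', 'Basic' "
    "FROM VESS_SCHEMA.VARS WHERE NAME = 'Foo'"
)

# Delete one variable by name instead of everything for the cube.
db.execute(
    "DELETE FROM SAMPLE.VARS "
    "WHERE NAME = 'Old' AND \"DATABASE\" = 'Basic'"
)

remaining = db.execute("SELECT NAME, VALUE FROM SAMPLE.VARS").fetchall()
print(remaining)
```

Narrowing the DELETE with a NAME predicate is the safer habit in automation, since the unqualified version removes every variable for the cube.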

As I’ve said before, Vess continues to be an “interesting” proof of concept. As time permits, I am filling out more and more functionality. At present, Vess models things like substitution variables and metadata you might see in MaxL or EAS (cube statistics, user sessions, etc.), can load data to cubes, and can do certain outline-related operations.

Vess is not available as a public download at this time but I have handed a few copies out to get feedback. I think Vess is just about good enough to be used in automation and other things. If you’re interested in using this in a production situation (automation or otherwise), please contact me to discuss support options.