Beginning

I have seen various references to JExport on the Network54 forum over the years but I have never had a use for it — until now. I would like to use a cube to sort of ‘manage’ data, but the SQL backend for the data needs to be updated at the same time. I could just use a report script out of the cube and load that to the RDBMS, but I want to be able to dynamically update based on changes to the cube, rather than have to sweep the cube at certain intervals. This also plays well into the fact that Dodeca will be used as a front-end to update the data, so that when the new data is sent in (essentially as a Lock & Send operation), everything gets updated on the fly.

There doesn’t seem to be much JExport documentation out there, and I couldn’t find anything at all from people using JExport with a SQL backend. After stumbling through things with my feeble Java skills, lots of trial and error, and numerous cups of coffee, I was able to get everything set up and working.

First of all, I had to get the necessary files. The JExport documentation labels it as “shareware” (and it is not an officially supported piece of functionality from Oracle/Hyperion), so for the time being I will simply host a zip of the files here. If it turns out someday that I have to take this link down, then so be it, but for now, here you are.

Extracting the zip reveals a PDF whitepaper and another zip file. Inside the other zip you will find the following files:

- ExportCDF.jar — this is the jar file (a jar file is a zip file that contains a bunch of Java classes and some other things). You can open this up in 7-zip to view the contents.

- ExportCDF_Readme.htm — some information on installing and configuration stuff

- exportRDB.mdb — this is only necessary for demo purposes, you don’t really need it

- rdb.properties — you’ll want this for configuring the JDBC connection.

- RegisterExportCDF.msh — this is convenient to install the functions to Essbase for you (or you can do it through EAS).

- src folder — this has the source for all of the methods, you’ll need this in order to compile a new .jar yourself (like if you need to make changes to the implementation).

- Other files — there are some sample calc scripts, and a gif file (yay!)

So now what?

Following the included instructions is fairly straightforward. I can vouch for the fact that they work on Essbase 7.1.6, but I do not know how things may have changed under the hood in newer versions, so even if they work at all there, you may need to modify them accordingly.

As the directions indicate, you need to put the ExportCDF.jar file in to $ARBORPATH/java/udf. Also put the rdb.properties in the same folder. Here’s a tip if you want to use JDBC for the exports: ALSO put the JDBC jar file in this folder. For example, the SQL Server .jar I am using is sqljdbc.jar, so I put this file in the same folder as well.

Still following the included directions, you need to make some changes to your udf.policy file in the $ARBORPATH/java directory. Add the following lines:

grant codeBase "file:${essbase.java.home}/../java/udf/ExportCDF.jar" {

permission java.security.AllPermission;

};

Here’s another part where the included directions may fail you, if you are planning on using a SQL backend: ALSO add a line for your JDBC jar file. After getting some Access Denied errors during my troubleshooting process, it finally occurred to me that I needed to add a line for sqljdbc.jar too. Here’s what it looks like:

grant codeBase "file:${essbase.java.home}/../java/udf/sqljdbc.jar" {

permission java.security.AllPermission;

};

Again, change the names accordingly if you need to use different .jar files. If you have to use the DB2 trifecta of .jar files, then I imagine adding the three entries for these would work too. If you can’t access the udf.policy file because it’s in use, you may need to stop your Essbase service, edit the file, and restart the service. Now we should be all set to register the functions. The included MaxL file was pretty handy.

Be sure to change admin and password to an appropriate user on the analytic server (unless, of course, you use ‘admin’ and admin’s password is ‘password’…) and run it. On a Windows server this means opening up the command line, cd’ing to the directory with the MaxL script, and running ‘essmsh RegisterExportCDF.msh’. If you can’t run this from the Essbase server itself, then change localhost to the name of the server and run it from some other machine with the MaxL interpreter on it. If you go in to EAS and check out the “Functions” node under your analytic server, you should now see the three functions added there. Due to the way they’ve been added, they are in the global scope; that is, any application can access them. From here, the example calc script files included with JExport should help you find your way. I would suggest getting the file export method working first, as it has the fewest things that can go wrong. Here is the code (I have cleaned it up slightly for formatting issues):

/*

* Export to a text file

* arg 1: specify "file" to export to a text file

* arg 2: file name. This file name must be used to close the file after the calculation completes

* arg 3: delimiter. Accepts "tab" for tab delimited

* arg 4: leave blank when exporting to text files

* arg 5: an array of member names

* arg 6: an array of data

*/

/* Turn intelligent calc off */

SET UPDATECALC OFF;

/*

* Fix on Actual so that only one scenario is evaluated, otherwise a

* record for each scenario will be written and duplicated in the export

*/

FIX ("Actual")

Sales (

IF ("variance" < 0)

@JExportTo("file","c:/flat.txt",",","",

@LIST(

@NAME(@CURRMBR(Market)),

@NAME(@CURRMBR(Product)),

@NAME(@CURRMBR(measures)),

@NAME(@CURRMBR(year))),

@LIST(actual,budget,Variance)

);

ENDIF;

)

ENDFIX

/* Close the file */

RUNJAVA com.hyperion.essbase.cdf.export.CloseTarget "file" "c:/flat.txt" ;

Note that you have to anchor the JExport function to a member — so in this case, if you try to take out Sales and the parentheses that surround the IF/JExportTo/ENDIF, EAS will bark at you for invalid syntax. The main thing going on here is that we are calling the function with the given set of parameters (hey, there actually is a use for @NAME! I kid, I kid…). For blog formatting issues, I broke the call to JExportTo up into multiple lines, but this is still syntactically correct. In the case where we are exporting to a flat file, we also call a method to close the file (this part isn’t needed for JDBC).
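To picture what ends up in the flat file, here is a rough Java sketch of how one record could be assembled from the delimiter, member-name, and data arguments. This is an illustration only, not the ExportCDF source: the class and method names are made up, and the field order (members first, then data) and number formatting are assumptions. The only detail taken from the script comments is that the delimiter argument accepts "tab".

```java
import java.util.ArrayList;
import java.util.List;

public class DelimitedRecordSketch {

    // The script comments say the delimiter argument accepts "tab";
    // matching it case-insensitively is an assumption of this sketch.
    public static String resolveDelimiter(String delimiterArg) {
        return "tab".equalsIgnoreCase(delimiterArg) ? "\t" : delimiterArg;
    }

    // Assumed layout: member names first, then data values, on one line.
    public static String buildRecord(String delimiterArg, String[] members, double[] data) {
        List<String> fields = new ArrayList<String>();
        for (String member : members) {
            fields.add(member);
        }
        for (double value : data) {
            fields.add(String.valueOf(value));
        }
        return String.join(resolveDelimiter(delimiterArg), fields);
    }

    public static void main(String[] args) {
        // Hypothetical member names and values, mirroring the two @LIST arguments.
        String[] members = {"East", "Cola", "Sales", "Jan"};
        double[] data = {100.0, 120.0, -20.0};
        System.out.println(buildRecord(",", members, data));
    }
}
```

Comparing a few lines of the real flat file against a mock-up like this is a quick way to see whether the member combinations will fit the columns of the SQL table you have in mind.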

Feeling brave? Try and run it. If everything works as planned, you are well on your way to CDF happiness. In my case, I was aiming high and trying to get the JDBC stuff to work, but when I realized that was going nowhere, I decided to simplify things and go with the flat file approach. Among other things, it showed me that I had to fix up my member combinations a bit before it would fit into a SQL table.

But I want to use JDBC!

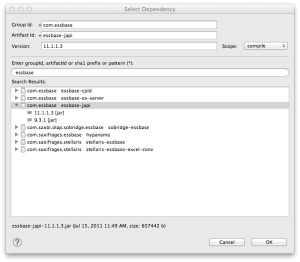

This is where you get to benefit from my pain and experience. As you saw above, you need to put the .jar file for your RDBMS in the $ARBORPATH/java/udf folder, AND you have to edit the udf.policy file for the jar(s) you add. Now you need to shore up your rdb.properties file. You can comment out everything in the file except the section you need, so in my case, I put a # in front of the DB2 entries and the Oracle entries, leaving just the TargetSQL entries.

# SQLServer entries:

TargetSQL.driver=com.microsoft.sqlserver.jdbc.SQLServerDriver

TargetSQL.url=jdbc:sqlserver://foosql.bar.com:1433;databaseName=EssUsers

TargetSQL.user=databaseuser

TargetSQL.password=databasepw

Notice that all of these entries start with “TargetSQL.” This prefix can actually be anything you want, but whatever it is, that’s how you will refer to it from your calc scripts. This means that if you have just one rdb.properties file but want to do some JExport magic with multiple SQL backends, you just put in another section like foo.driver, foo.url, and so on. Note that the syntax I am using explicitly calls out port 1433. This is what my little SQL Server 2000 box is using; you may need to adjust yours. I did not specify my JDBC URL correctly at first, so make sure that for SQL Server you put the databaseName parameter on the end. You could perhaps get away with not specifying the database name here and instead prefixing it to your table name in the JExport command, but this works so let’s run with it.
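Since a malformed JDBC URL is hard to spot from inside Essbase, it can help to test the rdb.properties entries on their own first. The following is a minimal sketch of a standalone checker, not part of JExport: the class name RdbPropertiesCheck and its require helper are hypothetical, and it assumes a Java 7 or later JDK on whatever machine you run it from, with the JDBC driver jar on the classpath.

```java
import java.io.FileInputStream;
import java.io.InputStream;
import java.sql.Connection;
import java.sql.DriverManager;
import java.util.Properties;

public class RdbPropertiesCheck {

    // Look up "<prefix>.<key>" (for example TargetSQL.url) and fail loudly if absent.
    public static String require(Properties props, String prefix, String key) {
        String value = props.getProperty(prefix + "." + key);
        if (value == null || value.trim().isEmpty()) {
            throw new IllegalArgumentException("Missing property: " + prefix + "." + key);
        }
        return value.trim();
    }

    public static void main(String[] args) throws Exception {
        if (args.length != 2) {
            System.err.println("usage: java RdbPropertiesCheck <path to rdb.properties> <prefix>");
            return;
        }
        Properties props = new Properties();
        try (InputStream in = new FileInputStream(args[0])) {
            props.load(in);
        }
        String prefix = args[1];
        // Load the driver class named in the file, then open and close one connection.
        Class.forName(require(props, prefix, "driver"));
        try (Connection conn = DriverManager.getConnection(
                require(props, prefix, "url"),
                require(props, prefix, "user"),
                require(props, prefix, "password"))) {
            System.out.println("Connected: " + conn.getMetaData().getDatabaseProductName());
        }
    }
}
```

Run with something like `java -cp .;sqljdbc.jar RdbPropertiesCheck rdb.properties TargetSQL` on Windows. If the driver class name or the URL is wrong, the exception shows up right in the console rather than somewhere inside Essbase.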

So what does the code look like in the calc script now? It’s the same, except we don’t need the RUNJAVA line at the end, and we change the JExportTo line to something like this:

@JExportTo("JDBC","TargetSQL","","TEST_TABLE",

@List(@NAME(@CURRMBR(Product)),@NAME(@CURRMBR(Market))),

@LIST(Actual));

The function is the same, but the parameters have changed a bit. We now tell it through the first parameter that this is a JDBC connection, and we tell it “TargetSQL” in the second parameter. This should look familiar because that’s essentially the prefix it’s looking for in the rdb.properties file.

The third parameter is blank (this was the delimiter field for text file exports). We then give it the name of the RDBMS table to put the data into, followed by the list of member names and the list of data values. In this case, the Java method quite literally creates a SQL INSERT statement that will look something like the following:

INSERT INTO TEST_TABLE VALUES ('<Product>', '<Market>', 2)

Of course, <Product> and <Market> will actually be the current member from those dimensions, and the 2 would be whatever the actual data cell is (based on the FIX statement you saw earlier, of course). Assuming you did everything correctly (and the specification for TEST_TABLE in the given database is consistent with the data you are trying to insert), everything should be all hugs and puppy dogs now.
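To make the shape of that statement concrete, here is a small Java sketch that builds the same kind of INSERT string from a table name, a list of member names, and a list of data values. It is a mock-up under my own assumptions, not the actual ExportCDF code: the class and method names are hypothetical, and the quote-doubling is something added here for safety rather than something the original is known to do.

```java
public class InsertStatementSketch {

    // Double any single quote so a member name containing one stays valid SQL.
    public static String quote(String memberName) {
        return "'" + memberName.replace("'", "''") + "'";
    }

    // Member names become quoted string values; data cells are appended as numbers.
    public static String buildInsert(String table, String[] members, double[] data) {
        StringBuilder sql = new StringBuilder("INSERT INTO ").append(table).append(" VALUES (");
        String separator = "";
        for (String member : members) {
            sql.append(separator).append(quote(member));
            separator = ", ";
        }
        for (double value : data) {
            sql.append(separator).append(value);
            separator = ", ";
        }
        return sql.append(")").toString();
    }

    public static void main(String[] args) {
        // Hypothetical member names standing in for the current Product and Market.
        System.out.println(buildInsert("TEST_TABLE", new String[] {"Cola", "East"}, new double[] {2.0}));
    }
}
```

Because an INSERT without a column list fills columns by position, the table's column order and types have to line up with the order of the two @LIST arguments: member names first, then data.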

But it’s not all hugs and puppy dogs

If, like me, you got here, but things didn’t work, you are now wondering “how in the name of all that is holy do I figure out what is wrong?” Let’s recap. We did all these things:

- Copied the .jar file to the server in the proper folder

- Copied the rdb.properties file to the same folder

- Copied any necessary .jar files for our RDBMS to the same folder

- Edited udf.policy to add all of these .jar files

- Ran the .msh file or used EAS to add the functions to the analytic server

- Stopped and started the Essbase service as needed (and one more time, just for good measure)

- Added the example code to a calc script in one of our apps

- Verified the syntax and it verified for us (no “function not defined” type of errors)

- Ran it (duh)

- Verified that everything works, at least for the .txt file output

How do we troubleshoot? If you’re like me, and don’t know Java inside and out, and know even less about how custom defined functions are set up within Essbase, then you really have no idea how to go about this. I tried looking at the server logs and the app logs and the Essbase console itself, but I just couldn’t find where, if anywhere, the output from the ExportCDF methods would go (you know, so I could see an actual error message about what might be wrong).

So I did what any normal Essbase developer would do and I dug in and brute forced it. There are some System.out.println() commands at various places in the Export functions, so I know if I can get the output from these then I can see what the deal is. At this point, I also knew that I was able to successfully write files on the Essbase server (with the Export function to a flat file method), so, lacking any other clear method, how about I output the error messages to a file instead? This is actually pretty straightforward, but the tricky part (for me) was recreating the ExportCDF.jar file from the .java files I had.

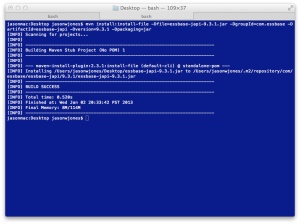

First of all, before any code changes, let’s see if we can turn the .java files into a .jar that still works on the analytic server. The source files are located in our original .zip file under the src folder. Under src/ is the com/ folder and another series of folders that represent the Java package name. Let’s start things off by putting a folder on the server somewhere so we have a place to work in. I used the server for a couple of reasons: one, I’ll be using the JDK from the server to roll the .jar file, so keeping the versions consistent removes one possible source of trouble, and two, when I need to revisit this, I have all the files I need located in a place that is easy to get to and regularly backed up. If I were a real Java master I would probably do it all on test and target the architecture to make sure they’re compatible (although just for kicks, I compiled with 1.4 and 1.6 and the output appears to be the same).

If you have some fancypants Java toolchain that can do all this for you, then you should probably do that. If you just pretend to know Java, you can get by with the following directions. After putting all of the files in a folder to work on, I ended up with a jexport/ folder containing the com/ folder hierarchy. Next, create a folder such as “test” under the jexport/ folder. This is where we will put the compiled Java files so we don’t muddy up our com/ folder hierarchy. Then we need a command to compile the .java files to .class files, and another command to roll the .class files up into a single jar. You can use the following commands, and optionally stick them in a batch file for convenience:

javac -d test -classpath %ARBORPATH%\essbase.jar com\hyperion\essbase\cdf\export\*.java

jar cf ExportCDF.jar -C test com

Note that for the classpath we need to refer to the essbase.jar file that is in our Essbase folder somewhere. Hopefully all compiles correctly and you end up with a shiny new ExportCDF.jar file. Stop the Essbase service, copy the new file to the $ARBORPATH/java/udf folder, start things up, and test that it works.

It was at this point in my own tribulations that I was quite pleased with myself for having used Java source code to create a module that Essbase can use, but I still hadn’t solved the mystery of the not-working JDBC export. And since I still don’t know how to get the output from Essbase as it executes the function, the next best thing seems to be just writing it to a file. With a little Java trickery, I can actually just map the System.out.println commands to a different stream — namely, a file on the Essbase server.

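You can add the following code to the ExportTo.java file in order to do so (replacing absolute file paths as necessary). The file also needs imports for java.io.FileOutputStream, java.io.PrintStream, and java.io.FileNotFoundException if they are not already there: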

/* Yes, there are much better ways to do this

No, I don't know what those are */

try {

FileOutputStream out = new FileOutputStream("D:/test.txt");

PrintStream ps = new PrintStream(out);

System.setOut(ps);

} catch (FileNotFoundException e) {}

After doing this, I discovered that in my particular case my JDBC URL was malformed, and I got my access denied error (which was fixed by putting the sqljdbc.jar reference in the udf.policy file). After all that, I fired up the JDBC test once more and was thrilled to discover that the data that had been exporting just fine to a flat file was now indeed being inserted into a SQL database! And thus concluded my two days of working this thing backwards in order to get JExport working with a SQL backend.
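For anyone repeating the redirect trick, here is a slightly more defensive variant as a standalone sketch. The class name, method name, and default log file name are all made up for illustration. Compared with the snippet shown earlier, it appends instead of overwriting, flushes after every println so nothing is lost if the process dies, captures System.err too (where stack traces usually go), and reports a failure to open the file instead of silently swallowing it.

```java
import java.io.FileNotFoundException;
import java.io.FileOutputStream;
import java.io.PrintStream;

public class OutputRedirectSketch {

    // Send System.out and System.err to the given file.
    public static void redirectTo(String path) {
        try {
            // Second FileOutputStream argument: append to the file.
            // Second PrintStream argument: flush automatically on println.
            PrintStream log = new PrintStream(new FileOutputStream(path, true), true);
            System.setOut(log);
            System.setErr(log);
        } catch (FileNotFoundException e) {
            // Could not open the file; say so on the original stderr.
            e.printStackTrace();
        }
    }

    public static void main(String[] args) {
        // Hypothetical default file name; pass a path as the first argument to override.
        redirectTo(args.length > 0 ? args[0] : "cdf-debug.log");
        System.out.println("redirect is active");
    }
}
```

Inside ExportTo.java only the body of redirectTo would be needed, called once before the first println. Pick a path the Essbase service account can write to.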

And now there is some JExport documentation out on the web — Happy cubing!