The Hyperion blogging-verse has been quite aflutter with the release of 11.1.2.4, so I won’t bore you with a recap of the new features; my esteemed colleagues have already covered those quite effectively. I will say, however, that by all accounts it seems to be a great release.

Oracle is really starting to hit their stride with EPM releases.

As a brief aside: I seem to be in the relative minority of EPM developers in that I come from the computer science side of things (as opposed to Finance), so believe me when I say that a tremendous amount of energy and time goes into developing great software: writing code, testing, documenting, and more. Software is kind of an odd beast that gets more and more complex the more you add on to it.

Sometimes the best thing a developer can do is delete code and remove features, rather than add things on. This is a perfectly natural part of software development. Removing features can leave behind cleaner sections of code that are faster and easier to reason about, and it typically sets the stage for developing something better down the road.

In the software world there is this notion of “deprecating” features. This generally means the developer is saying “Hey, we’re leaving this feature in – for now – but we discourage you from building anything that relies on it, we don’t really support it, and there’s a good chance that we are going to remove it completely in a future release.”
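For anyone who hasn't seen it up close, here is roughly what deprecation looks like in Java code. This is just a minimal sketch: the class and method names (`DeprecationSketch`, `legacyGreeting`, `greeting`) are made up for illustration and have nothing to do with the actual EAS or Essbase APIs.

```java
public class DeprecationSketch {

    /**
     * Hypothetical old entry point, kept so existing callers still work.
     *
     * @deprecated use {@link #greeting(String)} instead; this may be removed later.
     */
    @Deprecated
    public static String legacyGreeting(String name) {
        // The old method simply delegates to its replacement.
        return greeting(name);
    }

    /** Hypothetical replacement that new code should call. */
    public static String greeting(String name) {
        return "Hello, " + name;
    }

    /** Checks at runtime whether a one-String-argument method is marked @Deprecated. */
    public static boolean isDeprecated(String methodName) {
        try {
            return DeprecationSketch.class
                    .getMethod(methodName, String.class)
                    .isAnnotationPresent(Deprecated.class);
        } catch (NoSuchMethodException e) {
            throw new IllegalArgumentException("No such method: " + methodName, e);
        }
    }

    public static void main(String[] args) {
        System.out.println(legacyGreeting("EAS"));          // still works for now
        System.out.println(isDeprecated("legacyGreeting"));
        System.out.println(isDeprecated("greeting"));
    }
}
```

The deprecated method keeps working, but code in other classes that calls it will typically get a compiler warning, which is the language-level version of a note in the release notes.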

With that in mind, it was with a curious eye that I read the Essbase 11.1.2.4 release notes the other day, not so much for what was added as for what was taken away (deprecated). EIS is still dead (no surprise), the Visual Basic API is a dead end (again, not a secret), some essbase.cfg settings are dead, direct I/O is on the list (I have yet to hear a success story with direct I/O…), and zlib block compression is gone (I’m oddly sad about this). The interesting one for now, though, is this little tidbit: the Essbase Administration Services Java API is deprecated.

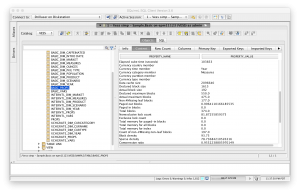

For those of you who aren’t aware, there is a normal Java API for Essbase that you may have heard of, but lurking off in the corner has been a Java API for EAS. This was a smallish API that one could use to create custom modules for EAS. It let you hook into the EAS GUI and add your own menu items and things. I played with it a little bit years ago and wrote a couple of small things for it, but nothing too fancy. As far as I know, the EAS Java API never really got used for anything major that wasn’t written by Oracle.

So, why deprecate this now? Like I said, it’s kind of Oracle’s way of saying to everyone, “Hey, don’t put resources into this. It’s going away, and if you put resources into it anyway and then realize you wasted your time and money, we’re going to point to these release notes and say we told you so.”

Why is this interesting? A couple of things. One, I’m sad that I have to cross off a cool idea for a side project I had (because I’d rather not develop for something that’s being killed).

Two (and perhaps actually interesting), to me it signals that Oracle is reducing the “surface area” of EAS, as it were, so that they can more easily pivot to an EAS replacement. I’m not privy to any information from Oracle, but I see two possible roads to go down, both of which involve killing EAS:

Option 1: EAS gets reimplemented into a unified tool alongside Essbase Studio’s development environment.

Option 2: EAS functionality gets moved to the web with an ADF based front-end similar in nature to Planning’s web-based front-end.

I believe Option 2 is the more likely play.

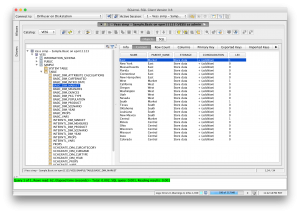

I always got the impression from the Essbase Studio development environment that it was meant to more or less absorb EAS functionality (at least, more than it actually ever did). I say this based on early screenshots I saw and my interpretation of its current functionality. Also, Essbase Studio is built on the same framework as Eclipse (one of the most popular Java programming environments), which is to say that it’s built on an incredibly rich, modular, flexible platform that looks good on multiple operating systems and is easy to update.

In terms of choosing a client-side/native framework to build tools on, this would be the obvious choice for Oracle to make (and again, it seems like they did make this choice some time ago, then pulled back from it).

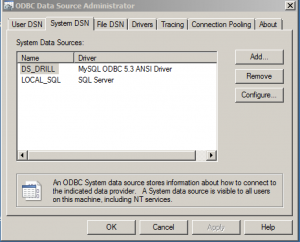

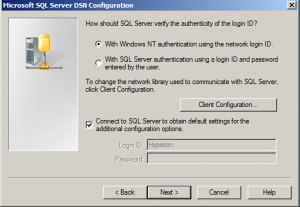

The alternative to a rich “fat client” is to go to the web. The web is a much more capable place than it was back in the Application Manager and original EAS days. My goodness, look at the Hyperion Planning and FDMEE interfaces and all the other magic that gets written with ADF. Clearly, it’s absolutely technically possible to implement the functionality that EAS provides in a web-based paradigm. Not only is it possible, but it also fits in great with the cloud.

In other words, if you’re paying whatever per month for your PBCS subscription, and you get a web-based interface to manage everything, how much of a jump is it for you to put Essbase itself in the cloud, and also have a web interface for managing that? Not much of a leap at all.