It seems that I am trucking right along in my quest to explore every nook and cranny of what Oracle Data Integrator has to offer (in the words of the inimitable Cameron Lackpour, “Wait, you use ODI for real ETL stuff?”). People always talk about ODI’s knowledge modules (KMs) and are typically referring to the workhorse RKMs, LKMs, and IKMs. A more exotic and less talked about KM is the SKM – Service Knowledge Module.

The idea behind an SKM is fairly straightforward. One of the fundamental building blocks in ODI is the data store – this is typically, but not always, a table in a relational database. We end up spending a good bit of our lives figuring out how to make data go from many source data stores to a target data store.

What’s this SKM business all about? It builds a web service with typical “CRUD” operations that you can deploy. This means we end up with a web service that clients could use to add, get, and search data in data stores we have.

There are several usage scenarios for this that I can think of. One, it might be a lot more tenable to deploy web services to expose data than to expose the database itself (think network security and such). That’s probably a slam dunk use case on its own right there. Second, you might want to access data from a language that doesn’t have support for your underlying data source technology. Third, it could just be a good architectural abstraction – making your clients oblivious to the exact underlying data source, giving you the flexibility to swap it out without affecting clients.

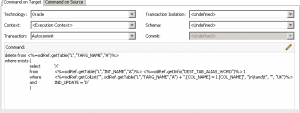

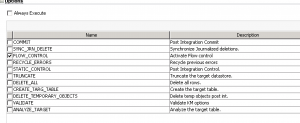

For the sake of argument, let’s say we have a dozen tables in a MySQL database and we’d like to expose a few of them as a web service. ODI Studio lets us pick which data stores to expose and which SKM to use. Then we just define a JAX-WS server and deploy the generated services to it.

In my case, I decided to deploy to a WildFly server (aka JBoss AS). This is a Java EE application server. It’s like WebLogic but not like Tomcat: Tomcat is technically just a servlet container and it doesn’t contain all of the functionality that the ODI web services need (the EE part of Java EE). In fact, I originally tried to deploy to Axis2 running under Tomcat 7 only to discover that Axis2 support has been deprecated/removed (for those not familiar, Axis2 is a project that runs in a servlet container and provides a base for exposing web services). So first note: do not use Axis2, especially if you are playing around in ODI 11, because I can’t even get it to work in ODI 12.

Other than that, ODI dutifully generates a bread and butter WAR file that can be deployed to JBoss (again, the typical deploy server is probably WebLogic but I’m a bit of a Red Hat guy so…). The ODI generated web service expects a JNDI data source to be configured. Again, for those of you not familiar, all JNDI does is make it so that the WAR (servlet/application/web service) doesn’t need to include its own particular database connection details. So rather than a particular web service being hardcoded to a certain database server and using a particular username/password, it allows the application to say “Hey there mister application container, can you give me the ‘Finance’ database connection? Thanks!” and go about its merry business. I ran into a small snag with the JNDI configuration that required me to modify the web.xml code to add in a <lookup-name> tag for my JNDI data source, but other than that, the service deployed without a hitch.
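To make the “Hey there mister application container” conversation a bit more concrete, here is a minimal sketch of what a JNDI data source lookup tends to look like in Java. This is not the code ODI generates; the class name, the helper methods, and the `jdbc/Finance` resource name are all made up for illustration. The `java:comp/env/` prefix is the standard Java EE component namespace, and my understanding is that a `<lookup-name>` entry in web.xml is what maps a name like that onto a container-specific binding.

```java
import javax.naming.InitialContext;
import javax.naming.NamingException;
import javax.sql.DataSource;

/** Illustrative only: how a deployed service might ask the container for a data source. */
final class JndiLookupSketch {

    // Standard Java EE prefix for a component's private naming environment.
    static final String ENV_PREFIX = "java:comp/env/";

    /** Turns a resource reference such as "jdbc/Finance" into a full JNDI name. */
    static String envName(String resourceRef) {
        String trimmed = resourceRef.startsWith("/") ? resourceRef.substring(1) : resourceRef;
        // Names that already carry a "java:" scheme are passed through untouched.
        return trimmed.startsWith("java:") ? trimmed : ENV_PREFIX + trimmed;
    }

    /** Performs the lookup; this only succeeds inside a container that has bound the name. */
    static DataSource lookup(String resourceRef) throws NamingException {
        InitialContext ctx = new InitialContext();
        try {
            return (DataSource) ctx.lookup(envName(resourceRef));
        } finally {
            ctx.close();
        }
    }

    public static void main(String[] args) {
        String ref = "jdbc/Finance"; // hypothetical resource name
        try {
            DataSource ds = lookup(ref);
            System.out.println("Got data source: " + ds);
        } catch (NamingException e) {
            // Expected when run outside an application server: nothing is bound.
            System.out.println("No binding for " + envName(ref) + ": " + e);
        }
    }
}
```

Notice that no host, port, username, or password appears anywhere in the application code; those live in the container’s data source definition, which is the whole point.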

So now on to the service itself: you get a traditional SOAP-based web service complete with a WSDL file for consumption by clients. For easy testing you can point an application like SoapUI to the WSDL file and generate requests that you can easily test with. You get methods to add, list, and filter. You can specify as much or as little data to filter on for a given entity (data store) so long as you include the primary key. Any data store in the web service exposed by the SKM must have a primary key, that’s the main catch – not that it should be too burdensome, since if you aren’t designing tables with primary keys, you might have bigger problems when it comes to using ODI…
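For a feel of what a tool like SoapUI is actually sending over the wire, here is a rough sketch that builds a SOAP 1.1 request envelope by hand. The operation name (`getCustomer`), the service namespace (`urn:example:odi`), and the field name (`CUSTOMER_ID`) are placeholders I made up; the real names and element layout come from the WSDL that ODI generates for your data stores, so treat this as the general shape rather than the exact payload.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Illustrative only: builds a SOAP 1.1 request body for a hypothetical data service operation. */
final class SoapRequestSketch {

    // The SOAP 1.1 envelope namespace.
    static final String SOAP_NS = "http://schemas.xmlsoap.org/soap/envelope/";

    /** Escapes the characters that would otherwise break the XML. */
    static String escape(String s) {
        return s.replace("&", "&amp;")
                .replace("<", "&lt;")
                .replace(">", "&gt;")
                .replace("\"", "&quot;");
    }

    /** Wraps the given fields in an operation element inside a SOAP envelope. */
    static String envelope(String serviceNs, String operation, Map<String, String> fields) {
        StringBuilder sb = new StringBuilder();
        sb.append("<soapenv:Envelope xmlns:soapenv=\"").append(SOAP_NS)
          .append("\" xmlns:svc=\"").append(escape(serviceNs)).append("\">");
        sb.append("<soapenv:Body><svc:").append(operation).append(">");
        for (Map.Entry<String, String> f : fields.entrySet()) {
            sb.append("<svc:").append(f.getKey()).append(">")
              .append(escape(f.getValue()))
              .append("</svc:").append(f.getKey()).append(">");
        }
        sb.append("</svc:").append(operation).append(">");
        sb.append("</soapenv:Body></soapenv:Envelope>");
        return sb.toString();
    }

    public static void main(String[] args) {
        Map<String, String> fields = new LinkedHashMap<>();
        fields.put("CUSTOMER_ID", "42"); // hypothetical primary key column
        System.out.println(envelope("urn:example:odi", "getCustomer", fields));
    }
}
```

In practice you would let SoapUI or a generated JAX-WS client produce this from the WSDL rather than concatenating strings, but seeing the envelope spelled out makes it clearer what “old school” means here.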

Thoughts so far:

- Don’t use Axis2 to deploy SKMs (doesn’t work/not supported anymore)

- WildFly (JBoss AS) does work, but seems to need a web.xml tweak for JNDI

- You might not have a Java EE container (WildFly, WebLogic) set up already, which could be a hurdle

- Generating the web service is pretty slick

- You get an “old school” WSDL-based web service

I actually really like how slick this can be. If you already have an application server and a set of data stores you want to expose, then boom, you can be up and running with a web service to provide access to those pretty easily. I guess the bigger question is this: is this what you want to do? Many web services are much more semantic in nature – i.e., your clients or potential clients might want more cohesive data rather than having to reach out to this table, that table, and some other thing, then combine it together into something. You wouldn’t use this, among other reasons, to expose Twitter data, for example.

As an additional thought, these SKMs have been around for quite some time – ages in the internet world. As a fairly experienced Spring developer, I have seen amazing advances in things such as Spring Data, Spring Data REST, and other technologies that allow one to build web-based create/read/update/delete operations on simple domain objects, and to do it with a more modern technology stack that provides JSON payloads and uses HTTP verbs such as POST/PUT/DELETE and so on. So personally I’d be more inclined to go in that direction to expose data than the SKM route.

But at the end of the day, if you have a set of tables you just need to expose over the web for whatever reason, this is a nice, low-investment way to accomplish that.