A very common task for Essbase automation is to move data from one cube to another. There are a number of reasons you may want or need to do this. For one, you may have a cube that has detailed data and another cube with higher-level data, and you want to move the sums or other calculations from one to the other. You may accept budget inputs in one cube but need to push them over to another cube. You may need to move data from a “current year” cube to a “prior year” cube (a data export or cube copy may be more appropriate, but that’s another topic).

For the purposes of our discussion, the Source cube is the cube with the data already in it, and the Target cube is the cube that is to be loaded with data from the source cube. There is a simple automation strategy at the heart of all these tasks:

- Calculate the source cube (if needed)

- Run a Report script on the source cube, outputting to a file

- Load the output from the report script to the target cube with a load rule

- Calculate the target cube

This can be done by hand, of course (through EAS), or you can do what the rest of us lazy cube monkeys do, and automate it. First of all, let’s take a look at a hypothetical setup:

We will have an application/database called Source.Foo which represents our source cube. It will have dimensions and members as follows:

- Location: North, East, South, West

- Time: January, February, …, November, December

- Measures: Sales, LaborHours, LaborWages

As you can see, this is a very simple outline. For the sake of simplicity I have not included any rollups, like having “Q1/1st Quarter” for January, February, and March. For our purposes, the target cube, Target.Bar, has an outline as follows:

- Scenario: Actual, Budget, Forecast

- Time: January, February, …, November, December

- Measures: Sales, LaborHours, LaborWages

These outlines are similar but not identical. Target.Bar has a Scenario dimension with Actual, Budget, and Forecast (whereas in the source cube, since it is for budgeting only, everything is assumed to be Budget). Also note that Target.Bar does not have a Location dimension; instead, this cube only concerns itself with totals for all regions. Looking back at our original thoughts on automation, in order for us to move the data from Source.Foo to Target.Bar, we need to calculate Source.Foo (to roll up all of the data for the Locations), run a report script that will output the data in the form Target.Bar needs, use a load rule on Target.Bar to load the data, and then calculate Target.Bar. Of course, business needs will affect the exact implementation of this operation, such as the timing, the calculation to use, and other complexities that may arise. You may actually have two cubes that don’t have a lot in common (dimensionally speaking), in which case your load rule might need to really jump through some hoops.
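To make the roll-up step concrete, here is a small Python sketch (not Essbase code) of what the aggregation on Source.Foo amounts to for this outline: the four regions are summed into a single Location total for each Time/Measures pair. The sample numbers are invented for illustration, and the sketch assumes every region simply adds into its parent.

```python
from collections import defaultdict

# Hypothetical level-0 data from Source.Foo: (location, month, measure) -> value.
# The numbers are made up for illustration only.
source_cells = {
    ("North", "January", "Sales"): 100.0,
    ("East", "January", "Sales"): 50.5,
    ("South", "January", "Sales"): 25.0,
    ("West", "January", "Sales"): 59.03,
    ("North", "January", "LaborHours"): 35.23,
}


def roll_up_locations(cells):
    """Sum every region into one total per (month, measure) pair."""
    totals = defaultdict(float)
    for (_location, month, measure), value in cells.items():
        totals[(month, measure)] += value
    return dict(totals)


totals = roll_up_locations(source_cells)
for (month, measure), value in totals.items():
    # Two decimal places, matching the {DECIMAL 2} setting used in the report script.
    print(month, measure, f"{value:.2f}")
```

The totals are the only thing Target.Bar cares about, which is why the Location dimension can disappear entirely on the way over.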

We’ll keep this example really simple though. We’ll also assume that the automation is being run from a Windows server, so we have a batch file to kick things off:

```
cd /d %~dp0
essmsh ExportAndLoadBudgetData.msh
```

I use cd /d %~dp0 on some of my systems as a shortcut to switch to the directory that contains the batch file, since the particular automation tool installed does not set the working directory to the folder the file lives in. Then we invoke the MaxL shell (essmsh, which is in the PATH) and run ExportAndLoadBudgetData.msh. I enjoy giving my automation files unnecessarily long filenames. It makes me feel smarter.

As you may have seen from an earlier post, I like to modularize my MaxL scripts to hide/centralize configuration settings, but again, for the sake of simplicity, this example will forgo that. Here is what ExportAndLoadBudgetData.msh could look like:

```
/* Copies data from the Budget cube (Source.Foo) to the Budget Scenario
   of Target.Bar */

/* your very standard login sequence here */
login AdminUser identified by AdminPw on EssbaseServer;

/* at this point you may want to turn spooling on (omitted here) */

/* disable connections to the application -- this is optional */
alter application Source disable connects;

/* PrepExp is a Calc script that lives in Source.Foo and for the purposes
   of this example, all it does is make sure that the aggregations that are
   to be exported in the following report script are ready. This may not be
   necessary and it may be as simple as a CALC ALL; */
execute calculation Source.Foo.PrepExp;

/* Budget is the name of the report script that runs on Source.Foo and outputs a
   text file that is to be read by Target.Bar's LoadBud rules file */
export database Source.Foo
    using report_file 'Budget'
    to data_file 'foo.txt';

/* enable connections, if they were disabled above */
alter application Source enable connects;

/* again, technically this is optional but you'll probably want it */
alter application Target disable connects;

/* this may not be necessary but the purpose of the script is to clear out
   the budget data, under the assumption that we are completely reloading the
   data that is contained in the report script output */
execute calculation Target.Bar.ClearBud;

/* now we import the data from the foo.txt file created earlier. Errors
   (rejected records) will be sent to errors.txt */
import database Target.Bar data
    from data_file 'foo.txt'
    using rules_file 'LoadBud'
    on error write to 'errors.txt';

/* calculate the new data (may not be necessary depending on what the input
   format is, but in this example it's necessary) */
execute calculation Target.Bar.CalcAll;

/* enable connections if disabled earlier */
alter application Target enable connects;

/* boilerplate cleanup. Turn off spooling if turned on earlier */
logoff;
exit;
```
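Since the import step sends rejected records to errors.txt, a scheduled job can check that file once essmsh has finished. Here is a minimal sketch in Python, assuming only that a missing or blank error file means nothing was rejected. The helper name `load_had_rejects` and the idea of running it as a follow-up step are my own illustration, not part of the process described here.

```python
from pathlib import Path


def load_had_rejects(error_file="errors.txt"):
    """Return True if the error file exists and contains any non-blank line."""
    path = Path(error_file)
    if not path.exists():
        # Assumption: no error file means no records were rejected.
        return False
    with path.open(encoding="utf-8", errors="replace") as handle:
        return any(line.strip() for line in handle)


if load_had_rejects():
    print("Rejected records found; review errors.txt before trusting Target.Bar.")
else:
    print("No rejected records.")
```

A check like this could decide whether the job reports success or raises an alert, rather than letting a partial load go unnoticed.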

At this point, if we don’t have them already, we would need to go design the aggregation calc script for Source.Foo (PrepExp.csc), the report script for Source.Foo (Budget.rep), the clearing calc script on Target.Bar (ClearBud.csc), the load rule on Target.Bar (LoadBud.rul), and the final rollup calc script (CalcAll.csc). Some of these may be omitted if they are not necessary for the particular process (you may opt to use the default calc script, may not need some of the aggregations, etc.).

For our purposes we will just say that the PrepExp and CalcAll calc scripts are just a CALC ALL or the default calc. You may want a “tighter” calc script, that is, you may want to design the calc script to run faster by way of helping Essbase understand what you need to calculate and in what order.

What does the report script look like? We just need something to take the data in the cube and dump it to a raw text file.

```
<ROW ("Time", "Measures")
{ROWREPEAT}
{SUPHEADING}
{SUPMISSINGROWS}
{SUPZEROROWS}
{SUPCOMMAS}
{NOINDENTGEN}
{SUPFEED}
{DECIMAL 2}
<DIMBOTTOM "Time"
<DIMBOTTOM "Measures"
"Location"
!
```

Most of the commands here should be pretty self-explanatory. If the syntax looks a little different than you’re used to, it’s probably because you can also jam all of the tokens into one line if you want, like {ROWREPEAT SUPHEADING}, but historically I’ve had them one to a line. If there were more dimensions that we needed to represent, we’d put them on the <ROW line. As per the DBAG, the various tokens in between the {}’s format the data: we suppress headings, missing rows, rows that are zero (although there are certainly cases where you might want to carry zeros over), commas in numbers, and indentation, and numbers will have two decimal places (instead of some long scientific notation). Also, I have opted to repeat row headings (just like you can repeat row headings in Excel) for the sake of simplicity. As another optimization tip, this isn’t necessary either; it just makes our lives easier in terms of viewing the text file and loading it to a SQL database or such.

As I mentioned earlier, we didn’t have rollups such as different quarters in our Time dimension. That’s why we’re able to get away with using <DIMBOTTOM, but if we wanted just the Level 0 members (the months, in this case), we could use the appropriate report script command. Lastly, from the Location dimension we are using the Location member, the parent to the different regions. (Whereas <DIMBOTTOM “Time” tells Essbase to give us all the members at the bottom of the Time dimension, simply specifying a member or members from the dimension will give us those members.) “Location” will not actually be written in the output of the report script because we don’t need it: the outline of Target.Bar does not have a Location dimension, since it’s implied that it represents all locations.

The output of the report script will look similar to the following:

```
January Sales 234.53
January LaborHours 35.23
February Sales 532.35
```

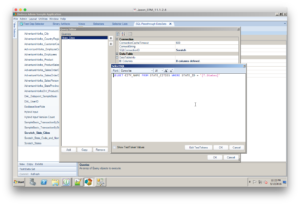

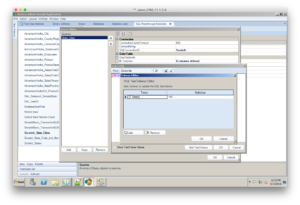

From here it is a simple matter of designing the load rule to parse the text file. In this case, the rule file is part of Target.Bar and is called LoadBud. If we’ve designed the report script ahead of time and run it to get some output, we can then go design the load rule. When the load rule is done, we should be able to run the script (and schedule it in our job scheduling software) to carry out the task in a consistent and automated manner.
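As a rough illustration of what LoadBud has to accomplish, the following Python sketch mimics the mapping. It assumes the file has three whitespace-separated fields per row (Time, Measures, then the value), that member names contain no spaces, and that the Scenario, which is absent from the file, is supplied as the constant Budget. This only models the rule's logic; it is not how a .rul file is actually built.

```python
def parse_report_line(line, scenario="Budget"):
    """Turn one report-script output row into a record for Target.Bar.

    Assumes three whitespace-separated fields: Time, Measures, value.
    The Scenario is not in the file, so it is supplied as a constant.
    """
    fields = line.split()
    if len(fields) != 3:
        raise ValueError(f"expected 3 fields, got {len(fields)}: {line!r}")
    month, measure, raw_value = fields
    return {
        "Scenario": scenario,
        "Time": month,
        "Measures": measure,
        "value": float(raw_value),
    }


# Sample rows taken from the report script output shown above.
sample_output = """\
January Sales 234.53
January LaborHours 35.23
February Sales 532.35
"""

records = [
    parse_report_line(line)
    for line in sample_output.splitlines()
    if line.strip()
]
for record in records:
    print(record)
```

The point to notice is the constant Scenario: the source cube never mentions Budget, so the load rule is the place where that member has to be added for every record.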

As an advanced topic, there are several performance considerations that can come into play here. I already alluded to the fact that we may want to tighten up the calc scripts in order to make things faster. In small cubes this may not be worth the effort (and often isn’t), but as we have more and more data, designing the calc properly (and basing it off of good dense/sparse choices) is critical. Similarly, the performance of the report script is also subject to the dense/sparse settings, the order of the output, and other configuration settings in the app and database. In general, what you are always trying to do (performance-wise) is to help the Essbase engine do its job better, and you do this by making the tasks you want to perform more conducive to the way that Essbase processes data. In other words, the more closely you can align your data processing to the under-the-hood mechanisms of how Essbase stores and manipulates your data, the better off you’ll be. Lastly, the load rule on the Target database, and the dense/sparse configurations of the Target database, will impact the data load performance. You may not and probably will not be able to always optimize everything all at once. It’s a balancing act, since a good setting for a report script may result in a suboptimal calculation process. But don’t let this scare you: try to just get it to work first and then go in and understand where the bottlenecks may be.

As always, check the DBAG for more information; it has lots of good stuff in it. And of course, try experimenting on your own. It’s fun, and the harder you have to work for knowledge, the more likely you are to retain it. Good luck out there!